Ralf Stapelfeldt recently criticized Nick Bostrom’s idea of Superintelligence by asking the question, “Can there be a dumb Superintelligence?”¹ Stapelfeldt noted that, after defining Superintelligence as “a machine [that] is clearly more intelligent than even the smartest humans combined,” Bostrom says such a machine might respond to a request to make paperclips by using its Superintelligence to turn the whole universe into paperclips, humans included. According to Stapelfeldt, human intelligence “is characterized by the ability to pursue multiple goals in a highly complex world and to weigh trade-offs when goals conflict with each other.” Thus, if an AI that endlessly makes paperclips is superintelligent in Bostrom’s sense, it is also dumb by Stapelfeldt’s definition of human intelligence.

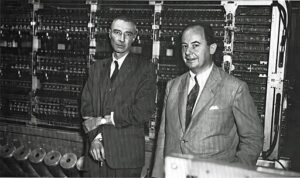

J. Robert Oppenheimer, by most standards, was not only intelligent; he was probably a genius. Working alongside him on the atomic bomb were other extremely intelligent men, such as Ernest Lawrence, Richard Feynman, Enrico Fermi and John von Neumann, the person Fermi described as “the smartest man alive.” They pursued their work on the most destructive weapon in the history of man with a single-mindedness (and a large amount of denial) that, for most of them, obliterated any thought of the immense and widespread consequences of what they were doing. Some had reservations, but the majority cheered when the bomb worked and only thought about the real-world consequences after it had killed over a hundred thousand Japanese civilians. While working on the bomb, most of them failed to “weigh the trade-offs” between a successful bomb, even one that ended WWII, and what its existence would mean for the risk to civilization in the future.

Making a bomb and making paperclips are not the same thing, but the scientists, mathematicians, and technicians who worked on the first atomic bomb, to say nothing of the politicians who later decided to use it, mostly failed to think about the long-term consequences of what they were doing. A few did, and several had regrets afterward. Similarly, the Russians who copied that first atomic bomb and then developed their own hydrogen bomb were single-mindedly hell-bent on catching up with, and then defeating, the West in the “nuclear arms race.” They, too, failed to weigh the consequences of what they were doing. Andrei Sakharov, the “father of the Soviet hydrogen bomb,” was blind to the consequences of his actions until later, when he came to condemn them. Stanley Kubrick’s film, “Dr. Strangelove or: How I Learned to Stop Worrying and Love the Bomb,” portrayed this blind mindset memorably.

Single-minded pursuit of destructive goals by brilliant scientists is not confined to the creation of nuclear weapons. The “genius” John von Neumann claimed that the Prisoner’s Dilemma of game theory “proved” that, if both sides owned nuclear weapons, one side would use them, even though mutual restraint was the best option for both. He recommended that the U.S. mount a first-strike nuclear attack on Russia before it could manufacture enough bombs to achieve parity, famously saying, “If you say why not bomb them tomorrow, I say why not today? If you say today at 5 o’clock, I say why not one o’clock?” It’s fair to say that, despite his massive intelligence, von Neumann’s absorption in his own game theory, combined with his distrust of the Soviet Union after it established domination over his native Hungary, blinded him to the ramifications of his recommendations. Thankfully, no one followed his advice, even though at the time he was a member of the U.S. Department of Defense’s Research and Development Board and a consultant to the Armed Forces Special Weapons Project, and he later became a Commissioner of the U.S. Atomic Energy Commission.

Superintelligence of the kind described by Bostrom may be dumb, but that may not make it different from intelligent humans. Continuing to burn fossil fuels in the face of global warming is dumb, as is building more nuclear weapons, upgrading the ones we have, or sharing them with other countries, but we do it. A government commitment to reining in China’s ability to produce and sell solar panels so that U.S. producers can capture more of the market, when the net result would be to reduce the use of solar energy worldwide, is dumb, but we are doing it. Insisting on the unfettered right to buy and carry guns in the country that leads the developed world in gun-related deaths is dumb, but we do it.

Stapelfeldt has given us a valid portrayal of the kind of Superintelligence described by Nick Bostrom, and rightly labeled it “dumb.” That would be a telling critique of the concept, except that impressive intelligence going hand in hand with stupidity appears to be not uncommon. So, like Oppenheimer, a superintelligent AI might survey its destruction: an empty universe except for an almost infinite supply of paperclips, with its final target, itself, the only thing left standing. And, like Oppenheimer, it might wax poetic, saying, “I am become death, the destroyer of worlds,” or, in a final electronic flash of insight, say simply, “Oh, crap. What was I thinking?”

- Stapelfeldt, R. (2021). Can there be a dumb Superintelligence? A critical look at Bostrom’s notion of Superintelligence. Academia Letters, Article 2076. https://doi.org/10.20935/AL2076

Read a novel about Oppenheimer, von Neumann, Soviet spy Klaus Fuchs, Andrei Sakharov, and how close the U.S. came to starting a “preventive” nuclear war against Russia. Was Oppenheimer himself a spy? Based on recently declassified CIA documents, Prisoner’s Dilemma: The Deadliest Game by Casey Dorman is an edge-of-your-seat thriller. Available on Amazon in Kindle and paperback!

Can we build a “friendly” Superintelligent AI that’s not a risk to humanity? We can try, but… Read Casey Dorman’s exciting sci-fi thriller, Ezekiel’s Brain.

Available in paperback and Kindle editions

Rather listen than read? Download the audio version of Ezekiel’s Brain from Audible.

Subscribe to Casey Dorman’s Newsletter.

I take issue with your calling certain political narratives “dumb.” That is YOUR opinion, and it is easily argued with conviction from the other side. It is not a universal understanding, and therefore it is not considered super intelligence. Take, for example, inalienable rights: a universal understanding, but not a lived-out narrative. Your main argument is intriguing and deserves attention and debate, unlike the red herring in your article.

But, I continue… The nuclear scientists’ sphere of thought about the creation of the bomb was pure intellectualism. They were breaking down the world of atoms and understanding their interactions, creating an interaction that could be both a weapon and a tool (“clean” energy). I argue that their job was not the morality of their studies and science. It was pure intellectualism. Should it have been? I would argue yes. I do think there should be a moral standard for science and knowledge. People with power of mind, money and leadership carry that burden. Just because we can doesn’t mean we should, because we are human, with flaws, emotions, and individuality. Hence the importance of leaders who are intelligent AND moral. But as we know, we have leadership without morals, morals without intelligence, and intelligence without leadership and morality. One human does not have the capability to be super intelligent. As a collective we can try to achieve that (history tells us that we do not), but we will never be able to compete against AI. Dare I say, who is the judge of super intelligence? But AI, although capable of super intelligence, does not have the capacity for morality. Where does that leave us? Exactly where we are… with the necessity of human and machine working together, hopefully for the benefit of continuing and bettering civilization.

I’m not surprised that you disagree with my examples of dumb human decisions. I agree that one human doesn’t have the capacity to be super intelligent, especially since Bostrom’s concept of Superintelligence means being as smart as many human intelligences combined. A single human can be extremely intelligent, and perhaps a group of them, such as the group at Los Alamos, could be super intelligent, although Bostrom is also talking about an AI designed by another AI, so it is beyond what humans could achieve in any form, whether in a group or not. The point I’m making is that the dumbness of the Superintelligent AI is no different from the dumbness often shown by an intelligent individual or a group of intelligent humans, in this case the Los Alamos group. It is difficult to apply morality to science, but there are two points to be made: 1) the goals of scientists are not necessarily the goals of science. They did no basic science at Los Alamos; they essentially applied known science to the problem of designing a weapon. That is not a scientific goal; it’s an engineering goal, like designing a plane that flies or an X-ray machine that takes 3-D pictures. Science would not have been set back if they had refused to use their scientific knowledge to build a bomb. What they do with the scientific knowledge they have is something to which standards of morality can be applied. 2) Morality can also apply to science through the methods scientists use to conduct their studies. Dr. Mengele was a scientist studying genetics, and the basic science questions he was trying to answer may not have been immoral (or moral, for that matter), but his method of using Jewish children, whose bodies he manipulated in often painful or even lethal ways, was immoral. The same kind of standard has also been applied to the use of cells from aborted fetuses in stem cell research, which some people think is immoral.
I don’t agree, but I think it’s a legitimate moral issue that can be debated.