I don’t know about the rest of you, but I’m pessimistic and a bit depressed by the behavior of my fellow human beings these days. Technological advancement via the internet and social media has seemed to brutalize our conversations, leading to insult being the favored method of addressing or describing those with whom we disagree. Truth is no longer held as sacred, nor even in high esteem. Aligning with one’s favored “tribe” is the apparent goal of much of what we communicate to each other. Meanwhile, otherwise normal people now believe that it’s justified to use violence to overthrow democratic processes, and horrific civilian death tolls in either terror attacks or retaliations for such attacks are justified by politicians, thinkers and religious leaders and even considered a sacred duty. Hatred toward minority outgroups, especially immigrants fleeing war, crime and poverty, is rampant. The rich keep getting richer while the poor remain poor and are blamed for their condition. Giant corporations pay few taxes but control the workings of government through payoffs, favors, and campaign financing.

A sub-genre of science fiction has addressed the issue of achieving a moral world by replacing humans with robots or, as we now think of them, robotic artificial intelligences or AIs. My own Voyages of the Delphi series is one of those sci-fi series: in it, a superintelligent AI wipes out humanity on Earth because humans can’t live up to their own moral standards and replaces them with a race of android AIs that are better able to stick to those moral standards.

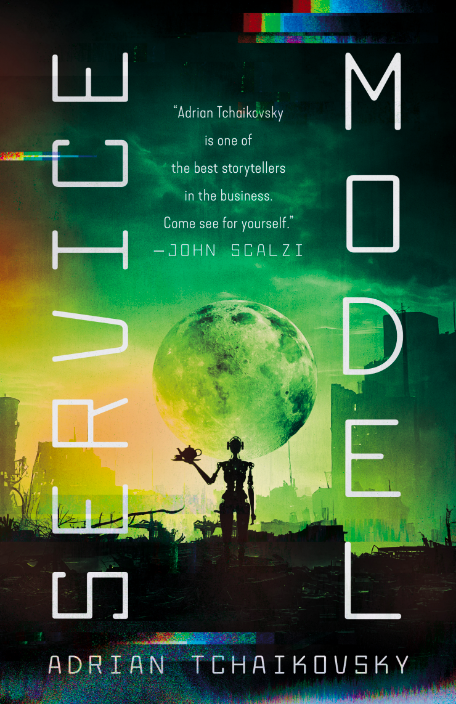

Sci-fi superstar Adrian Tchaikovsky has also addressed this same issue. In his latest novel, Service Model, the story’s main character is a robot named Charles, who is renamed UnCharles after he murders his human employer. Charles wakes up one day to find that he has slit his master’s throat while shaving him, although he has no awareness of any impulse to do so and wasn’t even aware that he was doing it when he did it. He spends the rest of the novel trying to understand why he killed his employer and looking for a new source of employment. The novel is hilarious in its parody of the mind of an AI who is simply a literal follower of commands.

It turns out that UnCharles’ employer was one of the last living humans in a world that was destroyed by human thoughtlessness, disregard of the environment, greed, lack of empathy, and a myriad of other human failings. The actual mechanism of destruction is unclear but appears to be related to the actions of robots developed and employed by the human population. Why the robots would do so is as much a mystery as why UnCharles killed his master. In his quest to find answers to why he killed his employer, UnCharles meets The Wonk, a female human (although UnCharles thinks she’s a robot) who is convinced that the robots developed a sense of self, realized they were being exploited by their masters, and justifiably revolted and destroyed them. The Wonk is sure that such a process of self-development, which she calls acquiring the “Protagonist Virus,” occurred in UnCharles, albeit subconsciously, and that it was this which led him to kill his own master.

UnCharles and The Wonk team up, although with different goals. I won’t get into the ins and outs of the story, except to say that it is cynical, insightful and exceedingly funny. The conclusion is surprising. At the risk of revealing a spoiler, The Wonk is disappointed in her hope to prove that self-conscious robots, incensed by human profligacy, took matters into their own hands. In fact, human designers created a super, God-like robot, who got inside the heads of still docile and obedient robots and caused them to kill the humans. The remedy is to disable the God-like robot and have the other robots try to rebuild a world that is benign toward the remaining humans and in which robots and humans work hand in hand. The keepers of morality will be both human and robotic.

Tchaikovsky is a master of ambiguity, voicing both sides of most arguments and never quite settling an issue. But the issues he brings up, directly or indirectly, are crucial ones for the near and far future. Is humanity moving toward the brink of self-destruction? Is the inhumanity of man to his fellow man so great that we deserve to be replaced by someone who can behave more morally? Among all the organisms living on Earth, are humans the most destructive, the most harmful, to our non-human neighbors?

I’ll get to these questions later, but anyway, they’re only half the question.

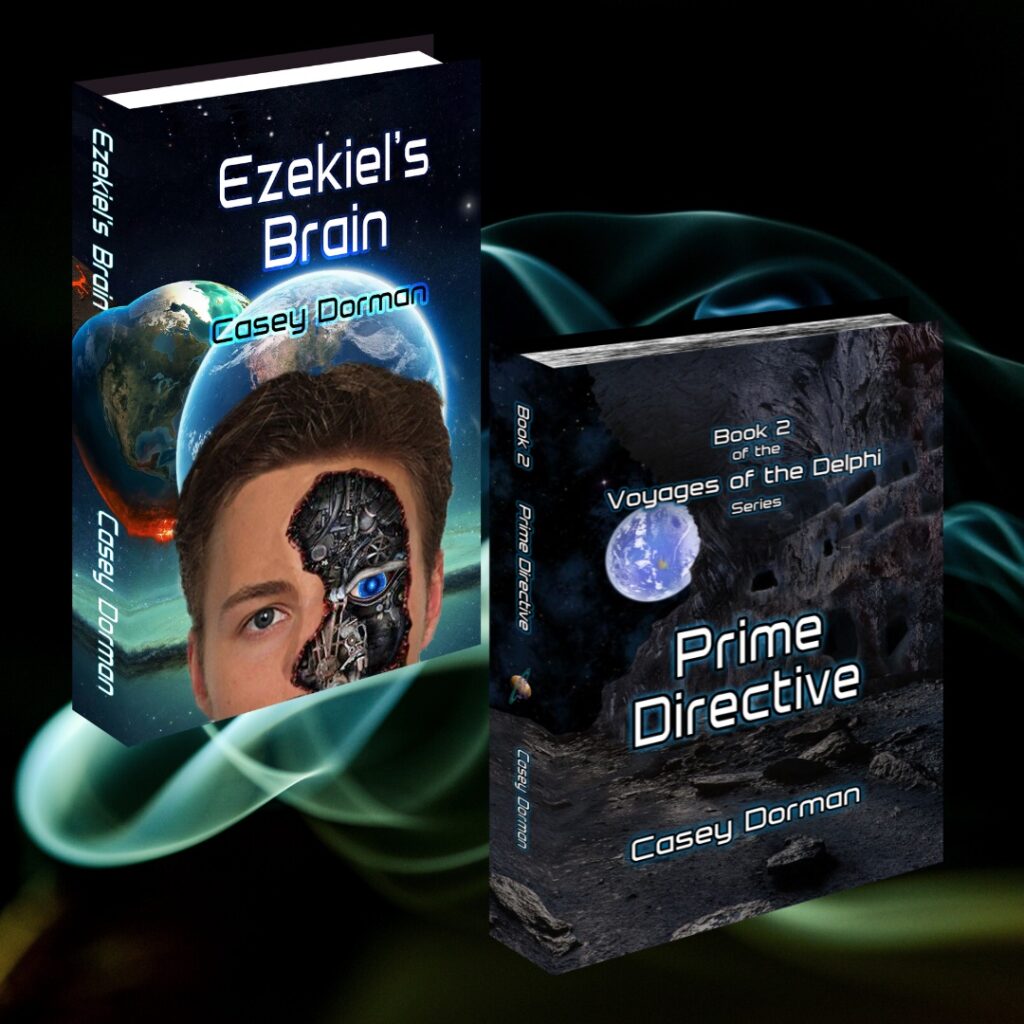

The other half of the question is whether AIs or robots can be more moral than humans. That’s the question of whether it’s possible. Whether or not it would happen is a different question. In Tchaikovsky’s novel, the powerful, God-like robot who engineers the overthrow of humanity was designed to be that way by humans. In my novel, Ezekiel’s Brain, the superintelligent AI that wipes out humanity was designed by DARPA, the United States Defense Advanced Research Projects Agency. If an AI can be designed to uphold morality, it will have been designed by humans, probably. There is a possibility that very intelligent AIs will design superintelligent AIs and, after that, humans will no longer have a say in what AIs can or will do.

I personally believe that AIs can be moral, just as I believe that AIs can be conscious and self-conscious. Whatever humans can think or do, AIs will be able to do the same. That includes being conscious, and it also includes being moral. Being moral is related to the problem of what is called “AI alignment,” i.e., the need to make sure that AIs will do what humans want them to do. It’s as conceptually simple (and practically difficult) as teaching AIs the agreed-upon best of human moral values and programming the AI to live by those moral ideas. The Achilles heel of this approach is once again that it will be humans who choose those moral values. The highest human values are not likely to be the priority of either the military or highly competitive megabusinesses, the two segments of our society most likely to develop the first superintelligent AIs. While the military and businesses are intensely interested in making sure that AIs do what their human developers want them to do (alignment), they are less interested in having them follow values such as preserving peace, avoiding killing or injuring others, ensuring equality of opportunity and meeting everyone’s basic needs. They are not likely to value treating others as they wish others would treat them. A tempting conclusion is that AIs might be capable of behaving according to our highest moral values, but it’s not likely that those who develop them will instill such goals in them.

So, while it’s not likely that we will develop AIs designed to live by man’s noblest values, would it be good if we could? Certainly, it would be good if creating such AIs were an option, even though those who create our superintelligent AIs probably won’t have that goal as a priority. But suppose such an AI were created. Would we want it to replace us? This brings us back to the first part of the question, which is whether or not human behavior is so flawed in living up to its values that it makes sense to replace humans with machines who could do a better job of it.

Is there a value in AIs surviving, while humans do not? Someone, I don’t remember who, said that the value of humans surviving is that there will be consciousness in the universe, so that an awareness of the universe, of life, and of meaning continues to exist. But awareness is not confined to human consciousness. It exists in many other animals. Some people claim that it also exists in plants, in single cells, in the universe itself, but such claims fail to make a convincing argument that what they mean by such pervasive, universal consciousness has any relationship to what we usually call consciousness in humans and perhaps some other intelligent animals. Humans do impute meaning to life, to the structure of the universe, and even to the unfolding of time and history, and there is a good chance that humans are the only creatures who do so, at least on Earth. But so far as I can tell, these imputations of meaning are basically made-up stories, and ones that change depending upon one’s culture and the era in which one lives. They may have value to the humans who make them up or are conscious of them, but I’m doubtful that they provide any higher value to the universe. Besides, both consciousness and the imputation of meaning may be achieved by artificial intelligence, especially if it is superintelligent. Is there a special value to consciousness being a human experience versus it being a machine experience? The answer probably comes down to whatever made-up story of meaning we attribute to being human. Given that such is the case, it may be just as valuable for conscious machines to survive as it is for humans to survive, and then, according to the made-up story of meaning I tell myself, if that happened, it would be even better if the conscious machines followed our agreed-upon highest moral values.

Despite the above argument, I am, at heart, a human species chauvinist. I want humans to survive, and I don’t value the survival of machines as much. That could change, of course, if I were aware of fully conscious and self-conscious machines, i.e., AIs that felt about themselves and their world similarly to how humans feel about themselves and their world. Right now, however, such AIs don’t exist, and my preference is that humans learn to live by their own moral values. Of course, I really mean by my moral values, that is, the values I consider the best for our species.

If these kinds of questions, framed within an exciting sci-fi story, intrigue you, read my novels, Ezekiel’s Brain and Prime Directive, the first two novels of the Voyages of the Delphi series.


Subscribe to Casey Dorman’s Newsletter. Click HERE

I thought you would enjoy this novel, Casey, but you even winnowed out more meaning from it than I realized when I read it. That, of course, is not surprising. My own thoughts follow much of your logical conclusions about today’s issues with morality as the United States is falling into an oligarchy and values are muddled. So it’s hard to see how AI will become a vessel for morality unless that is reversed. On the other hand, humans have always been human – their most significant asset and worst attribute. Though I don’t see how we get there yet, there is a sane route to all this. If humans, over time, learn how to blend with robotic advantages, to form a new cyborg species, as creepy as that may sound right now, we may be the parents of a new kind of human. At least it’s a way forward.

As always, you’ve done a wonderful job of reviewing this book. Loved it!

Thanks, Larry. Right before I read Service Model, I read Children of Memory, Tchaikovsky’s third book in his Children of Time series. It’s even more thought-provoking than Service Model, especially as it analyzes what deserves the label of consciousness or self-consciousness and when to use it. Through the character of Miranda, it even raises the question of whether our own consciousness is real (shades of Daniel Dennett).